2022-08-08

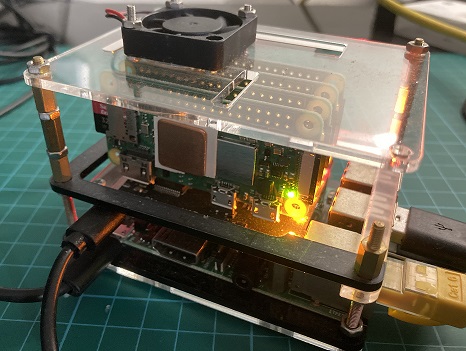

High Performance Raspberry Pi Cluster, part 2

In part 1 of this post, I wrote about the installation and basic configuration (clock, voltage, video, Wi-Fi, etc.) of the controller and each of the nodes. Now that the controller and all the nodes are working, it is time to proceed to the next step.

Up until now, the controller and the nodes are just a bunch of Raspberry Pis and Raspberry Pi Zeros that are connected together but each run separately. They are not running as a cluster yet. Now we will continue towards making a real computer cluster.

First, power up the controller and each of the nodes if you have not done so. Remember that you can use the following commands on the controller to turn on the fan and the nodes:

cnat$ clusterctrl fan on

cnat$ clusterctrl on

Next, we set up SSH so that the controller can connect to each node. We will use the same key to connect to all nodes.
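The nodes take a little while to boot after clusterctrl on, so before the first SSH connection it can help to wait until each one answers on the network. Below is a minimal sketch in plain sh, assuming the node addresses 172.19.181.1 to 172.19.181.4 used throughout this post and the iputils ping shipped with Raspberry Pi OS; wait_for_node is a hypothetical helper, not part of clusterctrl.

```shell
#!/bin/sh
# Hypothetical helper, not part of clusterctrl: wait until a node answers ping.
# Usage: wait_for_node HOST [TRIES]   (TRIES defaults to 30, 2 s apart)
wait_for_node() {
    host="$1"
    tries="${2:-30}"
    i=1
    while [ "$i" -le "$tries" ]; do
        # -c 1: send a single echo request; -W 1: wait at most 1 s for the reply
        if ping -c 1 -W 1 "$host" >/dev/null 2>&1; then
            echo "$host is up"
            return 0
        fi
        # Pause before the next attempt, but not after the last one
        if [ "$i" -lt "$tries" ]; then
            sleep 2
        fi
        i=$((i + 1))
    done
    echo "$host did not answer after $tries tries" >&2
    return 1
}
```

For example, from the controller:

cnat$ for n in 1 2 3 4; do wait_for_node "172.19.181.$n"; done

A node that answers ping may still need a few more seconds before its SSH server accepts connections.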

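If you have connected to the nodes before (for example, before re-flashing their SD cards), the controller may still hold their old host keys in its known_hosts file, and SSH will refuse to connect when a node's host key has changed. You can remove a stale entry with the following command, run on the controller. This is useful if you somehow mess up the following steps and have to start over. As usual, replace X with the node number.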

cnat$ ssh-keygen -f ~/.ssh/known_hosts -R "172.19.181.X"

We start by creating a new SSH key on the controller. You can choose a passphrase, or leave it empty by just pressing Enter at the prompt.

cnat$ ssh-keygen -t rsa -b 4096

Display the public key on the screen and copy it somewhere; it is the [key] used in a later step.

cnat$ cat ~/.ssh/id_rsa.pub

The next step is to configure the SSH client on the controller so that you can log in using the node names (p1 to p4) instead of their IP addresses.
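The four Host entries for the SSH config differ only in the node number, so they could also be generated rather than typed by hand. A minimal sketch, assuming the nodes p1 to p4 sit at 172.19.181.1 to 172.19.181.4 as in those entries; gen_ssh_config is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print one ~/.ssh/config "Host" block per ClusterHAT node.
# Assumes nodes p1..p4 at 172.19.181.1..4, as in the entries in this post.
# Usage: gen_ssh_config USERNAME
gen_ssh_config() {
    user="$1"
    for n in 1 2 3 4; do
        printf 'Host p%s\n    Hostname 172.19.181.%s\n    User %s\n\n' "$n" "$n" "$user"
    done
}
```

For example, replacing [usr] with your username on the nodes:

cnat$ gen_ssh_config [usr] >> ~/.ssh/config

If you append the entries this way, you can skip the manual editing with nano. Running it twice adds duplicate entries; ssh uses the first value it finds for each option, so the duplicates are harmless but untidy.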

cnat$ nano ~/.ssh/config

Copy the following into the file, replacing [usr] with your username on the nodes:

Host p1
    Hostname 172.19.181.1
    User [usr]

Host p2
    Hostname 172.19.181.2
    User [usr]

Host p3
    Hostname 172.19.181.3
    User [usr]

Host p4
    Hostname 172.19.181.4
    User [usr]

Log in to node X from the controller:

cnat$ ssh 172.19.181.X

Then set up SSH on the node, replacing [key] with the public key you copied in the previous step:

pX$ mkdir -p ~/.ssh

pX$ echo "[key]" >> ~/.ssh/authorized_keys

The key works right away, because the SSH server reads authorized_keys at every login, but this is a good opportunity to update each node and reboot it. If you have not updated the controller yet, you can do the same there.

pX$ sudo apt update && sudo apt upgrade -y && sudo reboot

If it is not configured yet, we also need to set up time synchronization on the controller and all the nodes. This is important because the clocks of the controller and the nodes must agree for them to run as a cluster. If you have not set the locale and timezone on the controller and nodes yet, do it now with the sudo raspi-config command. Then install ntpdate on every machine:

$ sudo apt install -y ntpdate

The next step is to set up an NFS shared folder, which the rest of this series relies on. For this step, I recommend using a separate USB flash drive from a reputable brand, plugged into one of the controller's USB ports. Use the following commands to check that the flash drive is connected:

cnat$ ls -la /dev

cnat$ lsblk

Format the drive, making sure you pick the correct device, because formatting erases everything on it. In this example it is /dev/sda1:

cnat$ sudo mkfs.ext4 /dev/sda1

Then create the mount point, set its permissions, and configure the drive to mount automatically at boot.

Note that this example gives everyone full access to the NFS share, for testing purposes only. Beyond testing, you should set proper permissions on the NFS share for your username.

Note that the later parts of this post assume that the NFS storage is mounted at /media/Storage.

cnat$ sudo mkdir /media/Storage

cnat$ sudo chown -R nobody:nogroup /media/Storage

cnat$ sudo chmod -R 777 /media/Storage

Use this command to find the UUID of the drive, and copy the UUID for the next step:

cnat$ sudo blkid

Add the following line to the bottom of /etc/fstab (for example with sudo nano /etc/fstab), replacing [UUID] with the UUID you copied:

UUID=[UUID] /media/Storage ext4 defaults 0 2

Make sure that the NFS server is installed on the controller:

cnat$ sudo apt install -y nfs-kernel-server

Then share the USB storage with the nodes' subnet (172.19.181.0/24) by adding the following line to /etc/exports:

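/media/Storage 172.19.181.0/24(rw,sync,no_root_squash,no_subtree_check)

Finally, mount the drive and restart the NFS server. Mounting the drive hides the permissions set on the empty /media/Storage directory, so apply them again once the drive is mounted:

cnat$ sudo mount -a

cnat$ sudo chown -R nobody:nogroup /media/Storage

cnat$ sudo chmod -R 777 /media/Storage

cnat$ sudo systemctl restart nfs-kernel-server.service

Now create a test file and check that you can access it from each node. Note that if you copy this example from Davin L's The Missing ClusterHat Tutorial, you need to change the smart quotes “ and ” to ASCII double quotes ".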

cnat$ echo "This is a test" >> /media/Storage/test

Before the nodes can access the NFS share, each node needs the NFS client installed and the shared storage mounted. Install the client on each node:

pX$ sudo apt-get install -y nfs-common

Create the mount folder, using the same path as on the controller. If you used different permissions there, use the same permissions here.

pX$ sudo mkdir /media/Storage

pX$ sudo chown nobody:nogroup /media/Storage

pX$ sudo chmod -R 777 /media/Storage

Set up automatic mounting by editing /etc/fstab on the node and adding this line to the bottom (172.19.181.254 is the controller's address on the cluster network):

172.19.181.254:/media/Storage /media/Storage nfs defaults 0 0

Then mount the shared drive and check that the test file created above is accessible:

pX$ sudo mount -a

pX$ cat /media/Storage/test

Up until now, we still have not configured the Pis into a cluster; they are just a bunch of computers connected together with SSH and sharing a folder over NFS. I think that covers the prerequisites, and we will actually start configuring the cluster in the next post.
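Before moving on, the SSH and NFS setup can be re-checked from the controller in one go. A minimal sketch, assuming the p1 to p4 aliases from ~/.ssh/config and the test file /media/Storage/test created above; check_nodes is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: log in to each node with the shared key and read the
# NFS test file. Assumes the p1..p4 aliases from ~/.ssh/config and the file
# /media/Storage/test created earlier in this post.
# Usage: check_nodes HOST...   (returns non-zero if any host fails)
check_nodes() {
    failed=0
    for host in "$@"; do
        # -n: don't read stdin; BatchMode: fail instead of prompting for a password;
        # ConnectTimeout: don't hang on a node that is powered off
        if ssh -n -o BatchMode=yes -o ConnectTimeout=5 "$host" \
            cat /media/Storage/test >/dev/null 2>&1; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=$((failed + 1))
        fi
    done
    [ "$failed" -eq 0 ]
}
```

For example:

cnat$ check_nodes p1 p2 p3 p4

If you set a passphrase on the key, load it into ssh-agent with ssh-add first; otherwise BatchMode makes every login fail.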

Additional Info

Under Construction.